I am not a developer. But learning how to use ImportXML has been one of the most interesting things I’ve done in the past few weeks.

If you haven’t heard of ImportXML yet, or just haven’t got around to using it, you need to learn it now.

Why?

– It’s not very hard

– It’s free and awesome

– It will save you tons of time if you do any work online and make you very happy! Yaaay

Assuming you work in the internet field, learning to code, even at a beginner/intermediate level, is a very valuable skill to have. You might never be the one writing complicated programs for your company, but you will understand what goes into it and how it works. Plus you’ll be able to create smaller “agile” tools that can make your life easier.

Pro tip: Learning a skill or theory is great, but applying it directly to one of your problems is the secret. It’s great to learn how to show all the dog breads in Gdocs using ImportXML, but you will most likely forget all the details of how it works once your done if you aren’t using it in your day-to-day activities.

Let’s get started..

ImportXML is a functionality inside Google Docs that allows you to make calls or queries out on the internet, without ever leaving the spreadsheet.

Lots of SEOs have written posts on how to build agile tools to get SEO work done, but ImportXML isn’t bias to the SEO world, you can do almost anything you can imagine with it.

Note : I’ve made a reference list at the end of this post for more on ImportXML with SEO

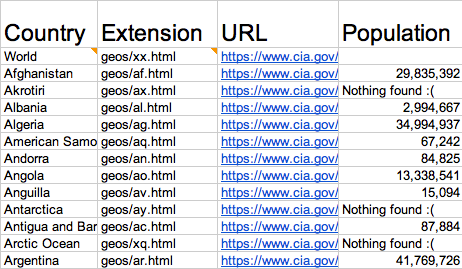

So let’s dig in… I’m going to show you how I helped my wife save hours for an assignment her boss wanted: Build a spreadsheet with every country, their population, GDP, & other data from multiple data sources online

Tasks

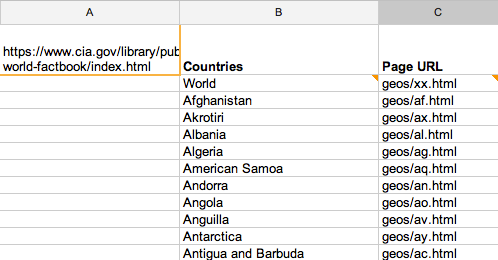

– Create a list of every country from CIA’s World Factbook.

– Get the page URL extension

– Get the full page URL

– Get the population

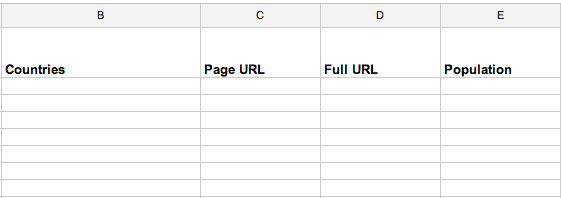

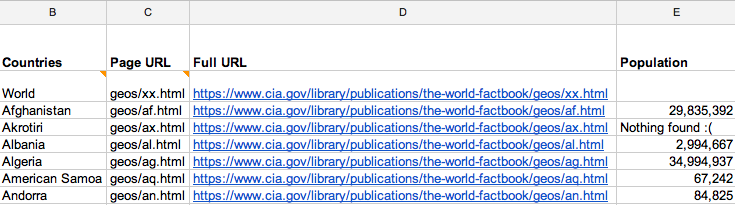

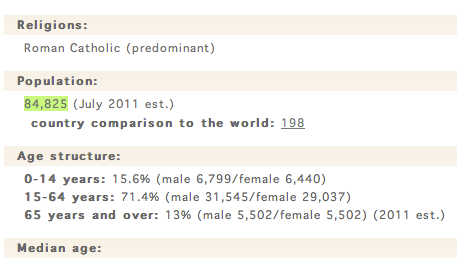

So we’re starting with this :

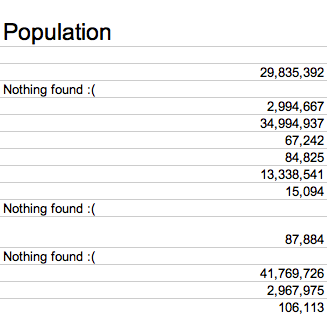

And need it to look like this :

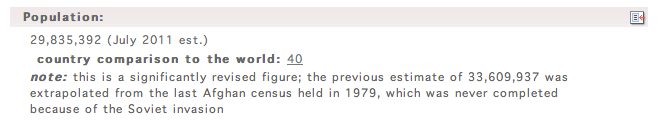

She was going to do it by searching manually for this field

on 300+ pages, then copying all the information by hand!

Which made me say noooooooooooo!

… but I bet this kid would love it

So the fist step once you’re in Google Docs is to add the URL you’ll be using to cell A1. That way you can easily call on that URL in the ImportXML query with (A1,xxx) instead of (http://theurlIwant.com,xxx)

The first function we’re going to do with ImportXML is

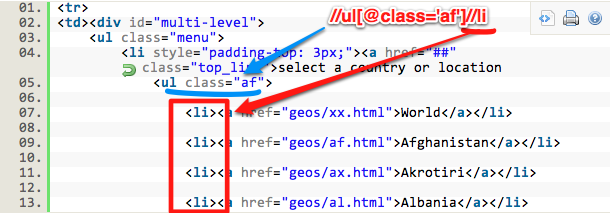

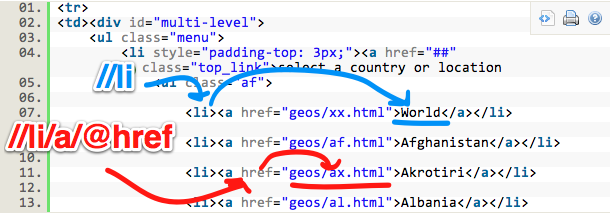

=ImportXML(A1,”//ul[@class=’af’]//li”)

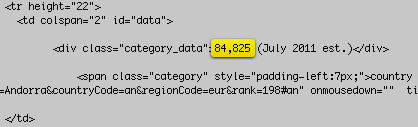

So how did I come up with that? Well you need to look at the data you need on the page then find it in the source code.

So this

equals this in HTML

[sourcecode language=’html’]

14 Replies to “[Tutorial] Learn ImportXML – Best SEO Tool Ever”

Comments are closed.

=ImportXML(A1,”//ul[@class=’af’]//li”) -> tried everything but I alawys get an #ERROR!

You know why?

That query is designed to work specifically with the example URL in the tutorial above (ie. the CIA world book website), which uses class markups in their html. That’s why I used [@class=’af’] to target a specific part on the page, then search the //li inside of it.

If you are using a different URL (reference in cell A1) it most likely won’t work, unless that page is also using classes and has an “af” class in the html.

Let me know what you’re trying to pull and from where and hopefully I can help out with more specifics.

Hi Jordan, thanks for the nice tutorial! Very useful!

However the cases are two: I’m either wrong or you made a little mistake. In particular, when explaining the =CONCATENATE part you refer to the “D” column, probably meaning the “C” one. Do you? Otherwise I don’t think it makes any sense. But, again, it’s possibly me who has not understood something of the process.

Thank you again!

Peace.

In other similar cases, the Xpath # does work but you need to use index outside of importXML

For the problem I’m doing, this formula =index(importxml(A2,”//div[@class=’description’]//p[1]”)) returns me the 1rst p in each div with the class of description. With [2] it returns me the second one, and so on..

In your example, the information you need is within the div attributes so I don’t know how that works without the script Chris wrote you.

Hi! Thanks for the wonderful article but unfortunately I am still having a problem with my project. I need to extract the “AP” information from the following document http://img824.imageshack.us/img824/3640/xpath.jpg

. I will appreciate your help! Thanks

Awesome tutorial. The only problem I have is that when the spreadsheet refreshes, it runs the formula again, and that time it does not work. I think this might have to do with the fact that the values that I am referencing update every few minutes – though my URL does not change and the class names do not change so I’m not sure why it breaks when it refreshes. Any thoughts?

for reference, the page I am pulling data from is a search on socialmention.com such as:

http://www.socialmention.com/search?q=seo&t=all&btnG=Search

hi

hi.. compliments for your job

can you tall me how i can extract the image url ?

importxml(a1,//img@src) dont work

can you help me?

I want to use importxml in a google spreadsheet to collect google search results for different queries in a list. I got it working, but only as far as returning local results. I need to collecte the same search results as when I do a google search manually with geosurf to another coubtry, e.g. Denmark going to google.dk. When you search from google.dk the number of results with geosurf are different than google.dk without geosurf. I am trying to do it with a VPN connection, but I still only get the results that correspond to non-geosurf.

So it is starting to look like the url goes out from the server, not the client that is on the VPN.

Can you help me understand how the url is send out, and from where? Any idea how I can make it work the way I want?

Thanks

Bent

Hi Jordan,

Thanks for the great article … very useful.

For the application I had in mind I need each importxml query to put the retrieved code into a single cell. For example when tarting a bullet list at the moment it is:

– outputting to multiple cells horizontally (columns) when targeting ul

– outputting to multiple cells vertically (rows) when targeting li

Is it possible, using a gsheet formula or xpath code, to get it to output the li elements into a single cell. Ideally with a comma separator between elements? Or without a separator, as complete text would be better than multiple cells?

Thanks

Nick